Intro¶

We startup by reusing parts of 01_the_machine_learning_landscape.ipynb from Géron [GITHOML]. So we begin with what Géron says about life satisfactions vs GDP per capita.

Halfway down this notebook, a list of questions for SWMAL is presented.

Chapter 1 – The Machine Learning landscape¶

This is the code used to generate some of the figures in chapter 1.

Setup¶

First, let's make sure this notebook works well in both python 2 and 3, import a few common modules, ensure MatplotLib plots figures inline and prepare a function to save the figures:

# To support both python 2 and python 3

from __future__ import division, print_function, unicode_literals

# Common imports

import numpy as np

import os

# to make this notebook's output stable across runs

np.random.seed(42)

# To plot pretty figures

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

plt.rcParams['axes.labelsize'] = 14

plt.rcParams['xtick.labelsize'] = 12

plt.rcParams['ytick.labelsize'] = 12

# Where to save the figures

PROJECT_ROOT_DIR = "."

CHAPTER_ID = "fundamentals"

def save_fig(fig_id, tight_layout=True):

path = os.path.join(PROJECT_ROOT_DIR, "images", CHAPTER_ID, fig_id + ".png")

print("IGNORING: Saving figure", fig_id) # SWMAL: I've disabled saving of figures

#if tight_layout:

# plt.tight_layout()

#plt.savefig(path, format='png', dpi=300)

# Ignore useless warnings (see SciPy issue #5998)

import warnings

warnings.filterwarnings(action="ignore", module="scipy", message="^internal gelsd")

print("OK")

Code example 1-1¶

This function just merges the OECD's life satisfaction data and the IMF's GDP per capita data. It's a bit too long and boring and it's not specific to Machine Learning, which is why I left it out of the book.

def prepare_country_stats(oecd_bli, gdp_per_capita):

oecd_bli = oecd_bli[oecd_bli["INEQUALITY"]=="TOT"]

oecd_bli = oecd_bli.pivot(index="Country", columns="Indicator", values="Value")

gdp_per_capita.rename(columns={"2015": "GDP per capita"}, inplace=True)

gdp_per_capita.set_index("Country", inplace=True)

full_country_stats = pd.merge(left=oecd_bli, right=gdp_per_capita,

left_index=True, right_index=True)

full_country_stats.sort_values(by="GDP per capita", inplace=True)

remove_indices = [0, 1, 6, 8, 33, 34, 35]

keep_indices = list(set(range(36)) - set(remove_indices))

return full_country_stats[["GDP per capita", 'Life satisfaction']].iloc[keep_indices]

print("OK")

The code in the book expects the data files to be located in the current directory. I just tweaked it here to fetch the files in datasets/lifesat.

import os

datapath = os.path.join("../datasets", "lifesat", "")

# NOTE: a ! prefix makes us able to run system commands..

# (command 'dir' for windows, 'ls' for Linux or Macs)

#

! dir

print("\nOK")

# Code example

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import sklearn.linear_model

# Load the data

try:

oecd_bli = pd.read_csv(datapath + "oecd_bli_2015.csv", thousands=',')

gdp_per_capita = pd.read_csv(datapath + "gdp_per_capita.csv",thousands=',',delimiter='\t',

encoding='latin1', na_values="n/a")

except Exception as e:

print(f"SWMAL NOTE: well, you need to have the 'datasets' dir in path, please unzip 'datasets.zip' and make sure that its included in the datapath='{datapath}' setting in the cell above..")

raise e

# Prepare the data

country_stats = prepare_country_stats(oecd_bli, gdp_per_capita)

X = np.c_[country_stats["GDP per capita"]]

y = np.c_[country_stats["Life satisfaction"]]

# Visualize the data

country_stats.plot(kind='scatter', x="GDP per capita", y='Life satisfaction')

plt.show()

# Select a linear model

model = sklearn.linear_model.LinearRegression()

# Train the model

model.fit(X, y)

# Make a prediction for Cyprus

X_new = [[22587]] # Cyprus' GDP per capita

y_pred = model.predict(X_new)

print(y_pred) # outputs [[ 5.96242338]]

print("OK")

SWMAL¶

Now we plot the linear regression result.

Just ignore all the data plotter code mumbo-jumbo here (code take dirclty from the notebook, [GITHOML])...and see the final plot.

oecd_bli = pd.read_csv(datapath + "oecd_bli_2015.csv", thousands=',')

oecd_bli = oecd_bli[oecd_bli["INEQUALITY"]=="TOT"]

oecd_bli = oecd_bli.pivot(index="Country", columns="Indicator", values="Value")

#oecd_bli.head(2)

gdp_per_capita = pd.read_csv(datapath+"gdp_per_capita.csv", thousands=',', delimiter='\t',

encoding='latin1', na_values="n/a")

gdp_per_capita.rename(columns={"2015": "GDP per capita"}, inplace=True)

gdp_per_capita.set_index("Country", inplace=True)

#gdp_per_capita.head(2)

full_country_stats = pd.merge(left=oecd_bli, right=gdp_per_capita, left_index=True, right_index=True)

full_country_stats.sort_values(by="GDP per capita", inplace=True)

#full_country_stats

remove_indices = [0, 1, 6, 8, 33, 34, 35]

keep_indices = list(set(range(36)) - set(remove_indices))

sample_data = full_country_stats[["GDP per capita", 'Life satisfaction']].iloc[keep_indices]

#missing_data = full_country_stats[["GDP per capita", 'Life satisfaction']].iloc[remove_indices]

sample_data.plot(kind='scatter', x="GDP per capita", y='Life satisfaction', figsize=(5,3))

plt.axis([0, 60000, 0, 10])

position_text = {

"Hungary": (5000, 1),

"Korea": (18000, 1.7),

"France": (29000, 2.4),

"Australia": (40000, 3.0),

"United States": (52000, 3.8),

}

for country, pos_text in position_text.items():

pos_data_x, pos_data_y = sample_data.loc[country]

country = "U.S." if country == "United States" else country

plt.annotate(country, xy=(pos_data_x, pos_data_y), xytext=pos_text,

arrowprops=dict(facecolor='black', width=0.5, shrink=0.1, headwidth=5))

plt.plot(pos_data_x, pos_data_y, "ro")

#save_fig('money_happy_scatterplot')

plt.show()

from sklearn import linear_model

lin1 = linear_model.LinearRegression()

Xsample = np.c_[sample_data["GDP per capita"]]

ysample = np.c_[sample_data["Life satisfaction"]]

lin1.fit(Xsample, ysample)

t0 = 4.8530528

t1 = 4.91154459e-05

sample_data.plot(kind='scatter', x="GDP per capita", y='Life satisfaction', figsize=(5,3))

plt.axis([0, 60000, 0, 10])

M=np.linspace(0, 60000, 1000)

plt.plot(M, t0 + t1*M, "b")

plt.text(5000, 3.1, r"$\theta_0 = 4.85$", fontsize=14, color="b")

plt.text(5000, 2.2, r"$\theta_1 = 4.91 \times 10^{-5}$", fontsize=14, color="b")

#save_fig('best_fit_model_plot')

plt.show()

print("OK")

Ultra-brief Intro to the Fit-Predict Interface in Scikit-learn¶

OK, the important lines in the cells above are really just

#Select a linear model

model = sklearn.linear_model.LinearRegression()

# Train the model

model.fit(X, y)

# Make a prediction for Cyprus

X_new = [[22587]] # Cyprus' GDP per capita

y_pred = model.predict(X_new)

print(y_pred) # outputs [[ 5.96242338]]

What happens here is that we create model, called LinearRegression (for now just a 100% black-box method), put in our data training $\mathbf{X}$ matrix and corresponding desired training ground thruth vector $\mathbf{y}$ (aka $\mathbf{y}_{true})$, and then train the model.

After training we extract a predicted $\mathbf{y}_{pred}$ vector from the model, for some input scalar $x$=22587.

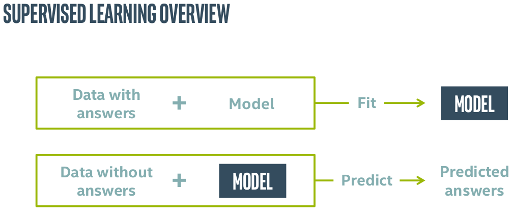

Supervised Training via Fit-predict¶

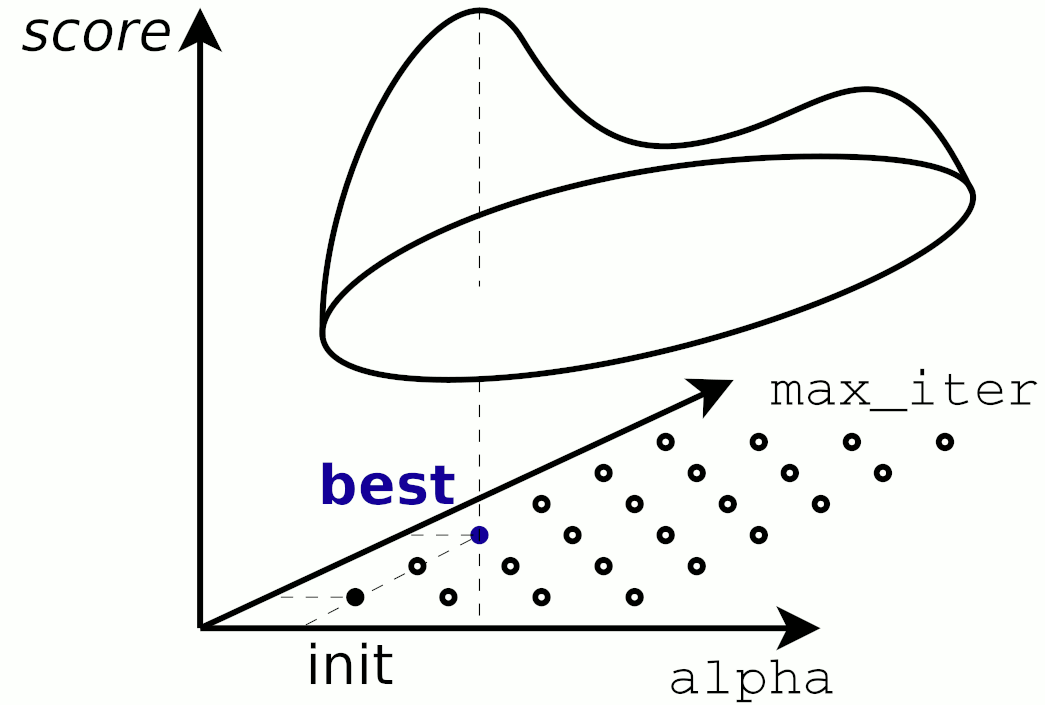

The train-predict (or train-fit) process on some data can be visualized as

In this figure the untrained model is a sklearn.linear_model.LinearRegression python object. When trained via model.fit(), using some know answers for the data, $\mathbf{y}_{true}~$, it becomes a blue-boxed trained model.

The trained model can be used to predict values from new, yet-unseen, data, via the model.predict() function.

In other words, how high is life-satisfaction for Cyprus' GDP=22587 USD?

Just call model.predict() on a matrix with one single numerical element, 22587, well, not a matrix really, but a python list-of-lists, [[22587]]

y_pred = model.predict([[22587]])

Apparently 5.96 the models answers!

(you get used to the python built-in containers and numpy on the way..)

Qa) The $\theta$ parameters and the $R^2$ Score¶

Géron uses some $\theta$ parameter from this linear regression model, in his examples and plots above.

How do you extract the $\theta_0$ and $\theta_1$ coefficients in his life-satisfaction figure form the linear regression model, via the models python attributes?

Read the documentation for the linear regressor at

http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html

Extract the score=0.734 for the model using data (X,y) and explain what $R^2$ score measures in broad terms

$$ \begin{array}{rcll} R^2 &=& 1 - u/v\\ u &=& \sum (y_{true} - y_{pred}~)^2 ~~~&\small \mbox{residual sum of squares}\\ v &=& \sum (y_{true} - \mu_{true}~)^2 ~~~&\small \mbox{total sum of squares} \end{array} $$

with $y_{true}~$ being the true data, $y_{pred}~$ being the predicted data from the model and $\mu_{true}~$ being the true mean of the data.

What are the minimum and maximum values for $R^2~$?

Is it best to have a low $R^2$ score or a high $R^2$ score? This means, is $R^2$ a loss/cost function or a function that measures of fitness/goodness?

NOTE$_1$: the $R^2$ is just one of many scoring functions used in ML, we will see plenty more other methods later.

NOTE$_2$: there are different definitions of the $R^2$, 'coefficient of determination', in linear algebra. We stricly use the formulation above.

OPTIONAL: Read the additional in-depth literature on $R^2~$:

# TODO: add your code here..

assert False, "TODO: solve Qa, and remove me.."

The Merits of the Fit-Predict Interface¶

Now comes the really fun part: all methods in Scikit-learn have this fit-predict interface, and you can easily interchange models in your code just by instantiating a new and perhaps better ML model.

There are still a lot of per-model parameters to tune, but fortunately, the built-in default values provide you with a good initial guess for good model setup.

Later on, you might want to go into the parameter detail trying to optimize some params (opening the lid of the black-box ML algo), but for now, we pretty much stick to the default values.

Let's try to replace the linear regression now, let's test a k-nearest neighbour algorithm instead (still black boxed algorithm-wise)...

Qb) Using k-Nearest Neighbors¶

Change the linear regression model to a sklearn.neighbors.KNeighborsRegressor with k=3 (as in [HOML:p.22,bottom]), and rerun the fit and predict using this new model.

What do the k-nearest neighbours estimate for Cyprus, compared to the linear regression (it should yield=5.77)?

What score-method does the k-nearest model use, and is it comparable to the linear regression model?

Seek out the documentation in Scikit-learn, if the scoring methods are not equal, can they be compared to each other at all then?

Remember to put pointer/text from the Sckikit-learn documentation in the journal...(did you find the right kNN model etc.)

# this is our raw data set:

sample_data

# and this is our preprocessed data

country_stats

# Prepare the data

X = np.c_[country_stats["GDP per capita"]]

y = np.c_[country_stats["Life satisfaction"]]

print("X.shape=",X.shape)

print("y.shape=",y.shape)

# Visualize the data

country_stats.plot(kind='scatter', x="GDP per capita", y='Life satisfaction')

plt.show()

# Select and train a model

# TODO: add your code here..

assert False, "TODO: add you instatiation and training of the knn model here.."

# knn = ..

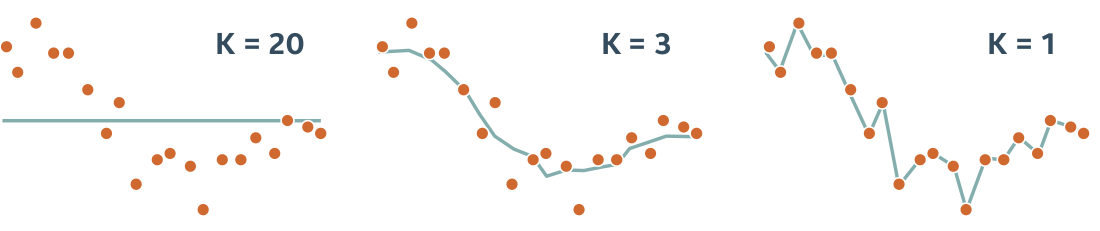

Qc) Tuning Parameter for k-Nearest Neighbors and A Sanity Check¶

But that not the full story. Try plotting the prediction for both models in the same graph and tune the k_neighbor parameter of the KNeighborsRegressor model.

Choosing k_neighbor=1 produces a nice score=1, that seems optimal...but is it really so good?

Plotting the two models in a 'Life Satisfaction-vs-GDP capita' 2D plot by creating an array in the range 0 to 60000 (USD) (the M matrix below) and then predict the corresponding y value will sheed some light to this.

Now reusing the plots stubs below, try to explain why the k-nearest neighbour with k_neighbor=1 has such a good score.

Does a score=1 with k_neighbor=1also mean that this would be the prefered estimator for the job?

Hint here is a similar plot of a KNN for a small set of different k's:

sample_data.plot(kind='scatter', x="GDP per capita", y='Life satisfaction', figsize=(5,3))

plt.axis([0, 60000, 0, 10])

# create an test matrix M, with the same dimensionality as X, and in the range [0;60000]

# and a step size of your choice

m=np.linspace(0, 60000, 1000)

M=np.empty([m.shape[0],1])

M[:,0]=m

# from this test M data, predict the y values via the lin.reg. and k-nearest models

y_pred_lin = model.predict(M)

y_pred_knn = knn.predict(M) # ASSUMING the variable name 'knn' of your KNeighborsRegressor

# use plt.plot to plot x-y into the sample_data plot..

plt.plot(m, y_pred_lin, "r")

plt.plot(m, y_pred_knn, "b")

# TODO: add your code here..

assert False, "TODO: try knn with different k_neighbor params, that is re-instantiate knn, refit and replot.."

Qd) Trying out a Neural Network¶

Let us then try a Neural Network on the data, using the fit-predict interface allows us to replug a new model into our existing code.

There are a number of different NN's available, let's just hook into Scikit-learns Multi-Layer Perceptron for regression, that is an 'MLPRegressor'.

Now, the data-set for training the MLP is really not well scaled, so we need to tweak a lot of parameters in the MLP just to get it to produce some sensible output: with out preprocessing and scaling of the input data, X, the MLP is really a bad choice of model for the job since it so easily produces garbage output.

Try training the mlp regression model below, predict the value for Cyprus, and find the score value for the training set...just as we did for the linear and KNN models.

Can the MLPRegressor score function be compared with the linear and KNN-scores?

from sklearn.neural_network import MLPRegressor

# Setup MLPRegressor

mlp = MLPRegressor( hidden_layer_sizes=(10,), solver='adam', activation='relu', tol=1E-5, max_iter=100000, verbose=True)

mlp.fit(X, y.ravel())

# lets make a MLP regressor prediction and redo the plots

y_pred_mlp = mlp.predict(M)

plt.plot(m, y_pred_lin, "r")

plt.plot(m, y_pred_knn, "b")

plt.plot(m, y_pred_mlp, "k")

# TODO: add your code here..

assert False, "TODO: predict value for Cyprus and fetch the score() from the fitting."

[OPTIONAL] Qe) Neural Network with pre-scaling¶

Now, the neurons in neural networks normally expects input data in the range [0;1] or sometimes in the range [-1;1], meaning that for value outside this range the you put of the neuron will saturate to it's min or max value (also typical 0 or 1).

A concrete value of X is, say 22.000 USD, that is far away from what the MLP expects. To af fix to the problem in Qd) is to preprocess data by scaling it down to something more sensible.

Try to scale X to a range of [0;1], re-train the MLP, re-plot and find the new score from the rescaled input. Any better?

# TODO: add your code here..

assert False, "TODO: try prescale data for the MPL...any better?"

REVISIONS||

:- | :-

2018-12-18| CEF, initial.

2019-01-24| CEF, spell checked and update.

2019-01-30| CEF, removed reset -f, did not work on all PC's.

2019-08-20| CEF, E19 ITMAL update.

2019-08-26| CEF, minor mod to NN exercise.

2019-08-28| CEF, fixed dataset dir issue, datapath"../datasets" changed to "./datasets".

2020-01-25| CEF, F20 ITMAL update.

2020-08-06| CEF, E20 ITMAL update, minor fix of ls to dir and added exception to datasets load, udpated figs paths.

2020-09-24| CEF, updated text to R2, Qa exe.

2020-09-28| CEF, updated R2 and theta extraction, use python attributes, moved revision table. Added comment about MLP.

2021-01-12| CEF, updated Qe.

2021-02-08| CEF, added ls for Mac/Linux to dir command cell.

2021-08-02| CEF, update to E21 ITMAL.

2021-08-03| CEF, fixed ref to p21 => p.22.

2022-01-25| CEF, update to F22 SWMAL.

2022-08-30| CEF, update to v1 changes.

2023-08-30| CEF, minor table update for.

Python Basics¶

Modules and Packages in Python¶

Reuse of code in Jupyter notebooks can be done by either including a raw python source as a magic command

%load filename.py

but this just pastes the source into the notebook and creates all kinds of pains regarding code maintenance.

A better way is to use a python module. A module consists simply (and pythonic) of a directory with a module init file in it (possibly empty)

libitmal/__init__.py

To this directory you can add modules in form of plain python files, say

libitmal/utils.py

That's about it! The libitmal file tree should now look like

libitmal/

├── __init__.py

├── __pycache__

│ ├── __init__.cpython-36.pyc

│ └── utils.cpython-36.pyc

├── utils.py

with the cache part only being present once the module has been initialized.

You should now be able to use the libitmal unit via an import directive, like

import numpy as np

from libitmal import utils as itmalutils

print(dir(itmalutils))

print(itmalutils.__file__)

X = np.array([[1,2],[3,-100]])

itmalutils.PrintMatrix(X,"mylabel=")

itmalutils.TestAll()

Qa Load and test the libitmal module¶

Try out the libitmal module from [GITMAL]. Load this module and run the function

from libitmal import utils as itmalutils

itmalutils.TestAll()

from this module.

Implementation details¶

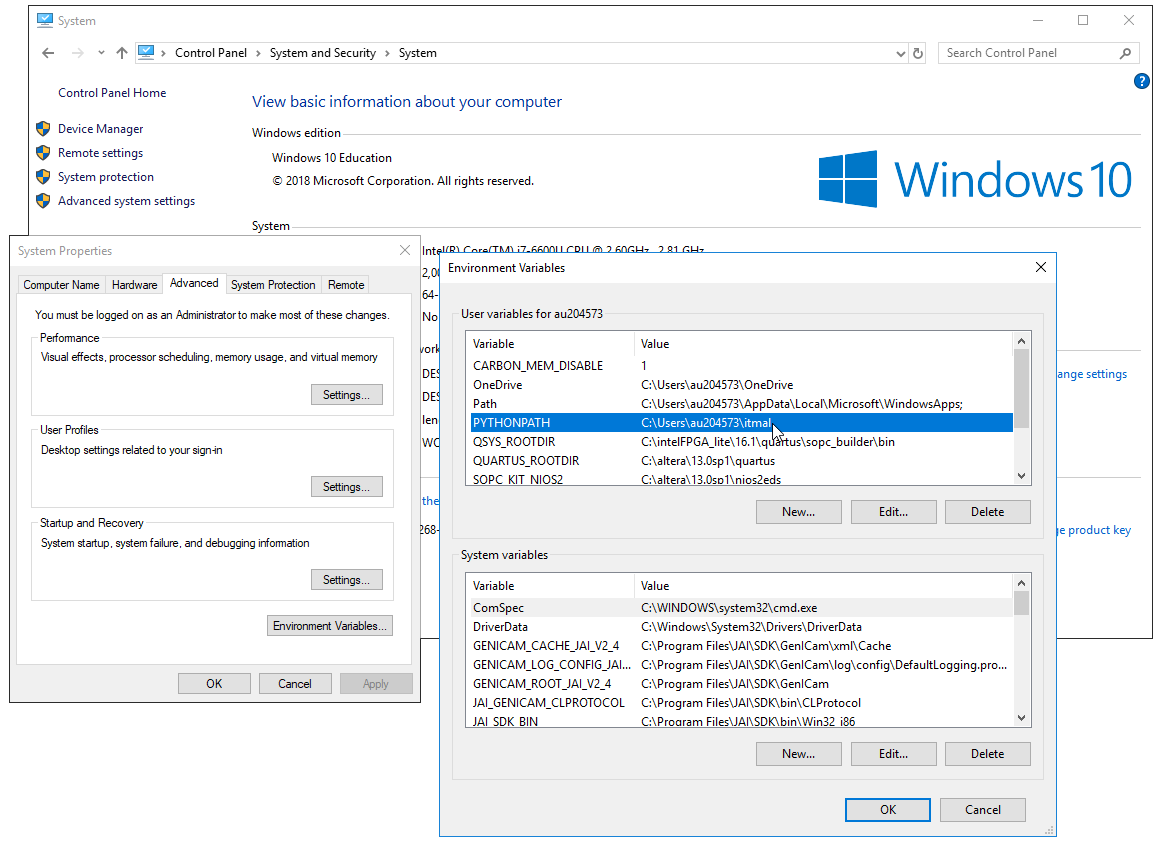

Note that there is a python module include search path, that you may have to investigate and modify. For my Linux setup I have an export or declare statement in my .bashrc file, like

declare -x PYTHONPATH=~/ASE/ML/itmal:$PYTHONPATH

but your itmal, the [GITMAL] root dir, may be placed elsewhere.

For Windows, you have to add PYTHONPATH to your user environment variables...see screenshot below (enlarge by modding the image width-tag or find the original png in the Figs directory).

or if you, like me, hate setting up things in a GUI, and prefer a console, try in a CMD on windows

CMD> setx.exe PYTHONPATH "C:\Users\auXXYYZZ\itmal"

replacing the username and path with whatever you have. Note that some Windows installations have various security settings enables, so that running setx.exe fails. Setting up a MAC should be similar to Linux; just modify your PYTHONPATH setting (still to be proven correct?, CEF).

If everything fails you could programmatically add your path to the libitmal directory as

import sys,os

sys.path.append(os.path.expanduser('~/itmal'))

from libitmal import utils as itmalutils

print(dir(itmalutils))

print(itmalutils.__file__)

For the journal: remember to document your particular PATH setup.

# TODO: Qa...

Qb Create your own module, with some functions, and test it¶

Now create your own module, with some dummy functionality. Load it and run you dummy function in a Jupyter Notebook.

Keep this module at hand, when coding, and try to capture reusable python functions in it as you invent them!

For the journal: remember to document your particular library setup (where did you place files, etc).

# TODO: Qb...

Qc How do you 'recompile' a module?¶

When changing the module code, Jupyter will keep running on the old module. How do you force the Jupyter notebook to re-load the module changes?

NOTE: There is a surprising issue regarding module reloads in Jupyter notebooks. If you use another development framework, like Spyder or Visual Studio Code, module reloading works out-of-the-box.

# TODO: Qc...

[OPTIONAL] Qd Write a Howto on Python Modules a Packages¶

Write a short description of how to use modules in Python (notes on modules path, import directives, directory structure, etc.)

# TODO: Qd...

Classes in Python¶

Good news: Python got classes. Bad news: they are somewhat obscure compared to C++ classes.

Though we will not use object-oriented programming in Python intensively, we still need some basic understanding of Python classes. Let's just dig into a class-demo, here is MyClass in Python

class MyClass:

def myfun(self):

self.myvar = "blah" # NOTE: a per class-instance variable.

print(f"This is a message inside the class, myvar={self.myvar}.")

myobjectx = MyClass()

NOTE: The following exercise assumes some C++ knowledge, in particular the OPRG and OOP courses. If you are an EE-student, or from another Faculty, then ignore the cryptic C++ comments, and jump directly to some Python code instead. It's the Python solution here, that is important!

Qe Extend the class with some public and private functions and member variables¶

How are private function and member variables represented in python classes?

What is the meaning of self in python classes?

What happens to a function inside a class if you forget self in the parameter list, like def myfun(): instead of def myfun(self): and you try to call it like myobjectx.myfun()? Remember to document the demo code and result.

[OPTIONAL] What does 'class' and 'instance variables' in python correspond to in C++? Maybe you can figure it out, I did not really get it reading, say this tutorial

# TODO: Qe...

Qf Extend the class with a Constructor¶

Figure a way to declare/define a constructor (CTOR) in a python class. How is it done in python?

Is there a class destructor in python (DTOR)? Give a textual reason why/why-not python has a DTOR?

Hint: python is garbage collection like in C#, and do not go into the details of __del__, ___enter__, __exit__ functions...unless you find it irresistible to investigate.

# TODO: Qf...

Qg Extend the class with a to-string function¶

Then find a way to serialize a class, that is to make some tostring() functionality similar to a C++

friend ostream& operator<<(ostream& s,const MyClass& x)

{

return os << ..

}

If you do not know C++, you might be aware of the C# way to string serialize

string s=myobject.tostring()

that is a per-class buildin function tostring(), now what is the pythonic way of 'printing' a class instance?

# TODO: Qg...

[OPTIONAL] Qh Write a Howto on Python Classes¶

Write a How-To use Classes Pythonically, including a description of public/privacy, constructors/destructors, the meaning of self, and inheritance.

# TODO: Qh...

Administration¶

REVISIONS||

:- | :- |

2018-12-19| CEF, initial.

2018-02-06| CEF, updated and spell checked.

2018-02-07| CEF, made Qh optional.

2018-02-08| CEF, added PYTHONPATH for windows.

2018-02-12| CEF, small mod in itmalutils/utils.

2019-08-20| CEF, E19 ITMAL update.

2020-01-25| CEF, F20 ITMAL update.

2020-08-06| CEF, E20 ITMAL update, udpated figs paths.

2020-09-07| CEF, added text on OPRG and OOP for EE's

2020-09-29| CEF, added elaboration for journal in Qa+b.

2021-02-06| CEF, fixed itmalutils.TestAll() in markdown cell.

2021-08-02| CEF, update to E21 ITMAL.

2022-01-25| CEF, update to F22 SWMAL.

2022-02-25| CEF, elaborated on setx.exe on Windows and MAC PYTHONPATH.

2022-08-30| CEF, updated to v1 changes.

2022-09-16| CEF, added comment on module reloading when not using notebooks.

2023-08-30| CEF, minor table and text update.

Mathematical Foundation¶

Vector and matrix representation in python¶

Say, we have $d$ features for a given sample point. This $d$-sized feature column vector for a data-sample $i$ is then given by

$$ \newcommand\rem[1]{} \rem{SWMAL: CEF def and LaTeX commands, remember: no newlines in defs} \newcommand\eq[2]{#1 &=& #2\\} \newcommand\ar[2]{\begin{array}{#1}#2\end{array}} \newcommand\ac[2]{\left[\ar{#1}{#2}\right]} \newcommand\st[1]{_{\scriptsize #1}} \newcommand\norm[1]{{\cal L}_{#1}} \newcommand\obs[2]{#1_{\mbox{\scriptsize obs}}^{\left(#2\right)}} \newcommand\diff[1]{\mbox{d}#1} \newcommand\pown[1]{^{(#1)}} \def\pownn{\pown{n}} \def\powni{\pown{i}} \def\powtest{\pown{\mbox{\scriptsize test}}} \def\powtrain{\pown{\mbox{\scriptsize train}}} \def\bX{\mathbf{M}} \def\bX{\mathbf{X}} \def\bZ{\mathbf{Z}} \def\bw{\mathbf{m}} \def\bx{\mathbf{x}} \def\by{\mathbf{y}} \def\bz{\mathbf{z}} \def\bw{\mathbf{w}} \def\btheta{{\boldsymbol\theta}} \def\bSigma{{\boldsymbol\Sigma}} \def\half{\frac{1}{2}} \bx\powni = \ac{c}{ x_1\powni \\ x_2\powni \\ \vdots \\ x_d\powni } $$

or typically written transposed to save as

$$ \bx\powni = \left[ x_1\powni~~ x_2\powni~~ \cdots~~ x_d\powni\right]^T $$

such that $\bX$ can be constructed of the full set of $n$ samples of these feature vectors

$$ \bX = \ac{c}{ (\bx\pown{1})^T \\ (\bx\pown{2})^T \\ \vdots \\ (\bx\pownn)^T } $$

or by explicitly writing out the full data matrix $\bX$ consisting of scalars

$$ \bX = \ac{cccc}{ x_1\pown{1} & x_2\pown{1} & \cdots & x_d\pown{1} \\ x_1\pown{2} & x_2\pown{2} & \cdots & x_d\pown{2}\\ \vdots & & & \vdots \\ x_1\pownn & x_2\pownn & \cdots & x_d\pownn\\ } $$

but sometimes the notation is a little more fuzzy, leaving out the transpose operator for $\mathbf x$ and in doing so just interpreting the $\mathbf{x}^{(i)}$'s to be row vectors instead of column vectors.

The target column vector, $\mathbf y$, also has the dimension $n$

$$ \by = \ac{c}{ y\pown{1} \\ y\pown{2} \\ \vdots \\ y\pownn \\ } $$

Qa Given the following $\mathbf{x}^{(i)}$'s, construct and print the $\mathbf X$ matrix in python.¶

$$ \ar{rl}{ \bx\pown{1} &= \ac{c}{ 1, 2, 3}^T \\ \bx\pown{2} &= \ac{c}{ 4, 2, 1}^T \\ \bx\pown{3} &= \ac{c}{ 3, 8, 5}^T \\ \bx\pown{4} &= \ac{c}{-9,-1, 0}^T } $$

Implementation Details¶

Notice that the np.matrix class is getting deprecated! So, we use numpy's np.array as matrix container. Also, do not use the built-in python lists or the numpy matrix subclass.

# Qa

import numpy as np

y = np.array([1,2,3,4]) # NOTE: you'll need this later

# TODO..create and print the full matrix

assert False, "TODO: solve Qa, and remove me.."

Norms, metrics or distances¶

The $\norm{2}$ Euclidian distance, or norm, for a vector of size $n$ is defined as

$$ \norm{2}:~~ ||\bx||_2 = \left( \sum_{i=1}^{n} |x_i|^2 \right)^{1/2}\\ $$

and the distance between two vectors is given by

$$ \ar{ll}{ \mbox{d}(\bx,\by) &= ||\bx-\by||_2\\ &= \left( \sum_{i=1}^n \left| x_{i}-y_{i} \right|^2 \right)^{1/2} } $$

This Euclidian norm is sometimes also just denoted as $||\bx||$, leaving out the 2 in the subscript.

The squared $\norm{2}$ for a vector can compactly be expressed via

$$ \norm{2}^2: ||\bx||_2^2 = \bx^\top\bx $$

The $\norm{1}$ 'City-block' norm is given by

$$ \norm{1}:~~ ||\bx||_1 = \sum_i |x_i| $$

but $\norm{1}$ is not used as intensive as its more popular $\norm{2}$ cousin.

Notice that $|x|$ in code means fabs(x).

Qb Implement the $\norm{1}$ and $\norm{2}$ norms for vectors in python.¶

First implementation must be a 'low-level'/explicit implementation---using primitive/build-in functions, like +, * and power ** only! The square-root function can be achieved via power like x**0.5.

Do NOT use any methods from libraries, like math.sqrt, math.abs, numpy.linalg.inner, numpy.dot() or similar. Yes, using such libraries is an efficient way of building python software, but in this exercise we want to explicitly map the mathematichal formulaes to python code.

Name your functions L1 and L2 respectively, they both take one vector as input argument.

But test your implementation against some built-in functions, say numpy.linalg.norm

When this works, and passes the tests below, optimize the $\norm{2}$, such that it uses np.numpy's dot operator instead of an explicit sum, call this function L2Dot. This implementation, L2Dot, must be pythonic, i.e. it must not contain explicit for- or while-loops.

# TODO: solve Qb...implement the L1, L2 and L2Dot functions...

assert False, "TODO: solve Qb, and remove me.."

# TEST vectors: here I test your implementation...calling your L1() and L2() functions

tx=np.array([1, 2, 3, -1])

ty=np.array([3,-1, 4, 1])

expected_d1=8.0

expected_d2=4.242640687119285

d1=L1(tx-ty)

d2=L2(tx-ty)

print(f"tx-ty={tx-ty}, d1-expected_d1={d1-expected_d1}, d2-expected_d2={d2-expected_d2}")

eps=1E-9

# NOTE: remember to import 'math' for fabs for the next two lines..

assert math.fabs(d1-expected_d1)<eps, "L1 dist seems to be wrong"

assert math.fabs(d2-expected_d2)<eps, "L2 dist seems to be wrong"

print("OK(part-1)")

# comment-in once your L2Dot fun is ready...

#d2dot=L2Dot(tx-ty)

#print("d2dot-expected_d2=",d2dot-expected_d2)

#assert fabs(d2dot-expected_d2)<eps, "L2Ddot dist seem to be wrong"

#print("OK(part-2)")

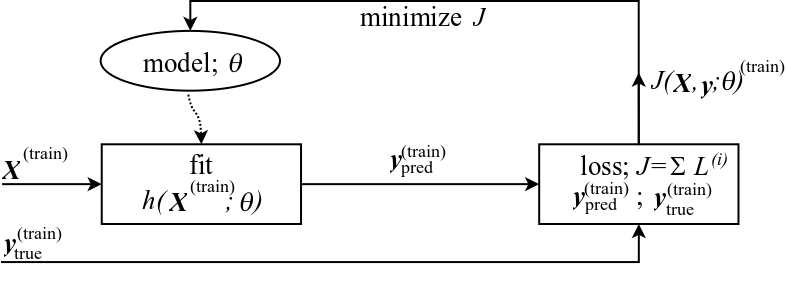

The cost function, $J$¶

Now, most ML algorithm uses norms or metrics internally when doing minimizations. Details on this will come later, but for now we need to know that an algorithm typically tries to minimize a given performance metric, the loss function, for all the input data, and implicitly tries to minimize the sum of all norms for the 'distances' between some predicted output, $y\st{pred}$ and the true output $y\st{true}$, with the distance between these typically given by the $\norm{2}$ norm

$$ \mbox{individual loss:}~~L\powni = \mbox{d}(y\st{pred}\powni,y\st{true}\powni) $$

with $y\st{pred}\powni$, a scalar value, being the output from the hypothesis function, that maps the input vector $\bx\powni$ to a scalar

$$ y_{pred}\powni = \hat{y}\powni = h(\bx\powni;\btheta) $$

and the total loss, $J$ will be the sum over all $i$'s

$$ \ar{rl}{ J &= \frac{1}{n} \sum_{i=1}^{n} L\powni\\ &= \frac{1}{n} \sum_{i=1}^{n} \mbox{d}( h(\bx\powni) , y\powni\st{true}) } $$

Cost function in vector/matrix notation using $\norm{2}$¶

Remember the data-flow model for supervised learning

Let us now express $J$ in terms of vectors and matrices instead of summing over individual scalars, and let's use $\norm{2}$ as the distance function

$$ \ar{rl}{ J(\bX,\by;\btheta) &= \frac{1}{n} \sum_{i=1}^{n} L\powni\\ &= \frac{1}{n}\sum_{i=1}^{n} (h(\bx\powni) - \by\powni\st{true})^2\\ &= \frac{1}{n} ||h(\bX) - \by\st{true} ||_2^2\\ &= \frac{1}{n} ||\by\st{pred} - \by\st{true} ||_2^2\\ } $$

with the matrix-vector notation

$$ \by_{pred} = \hat{\by} = h(\bX;\btheta) $$

Loss or Objective Function using the Mean Squared Error¶

This formulation is equal to the definition of the mean-squared-error, MSE (or indirectly also RMSE), here given in the general formulation for some random variable $Z$

$$ \ar{rl}{ \mbox{MSE} &= \frac{1}{n} \sum_{i=1}^{n} (\hat{Z}_i-Z_i)^2 = \frac{1}{n} SS\\ \mbox{RMSE} &= \sqrt{\mbox{MSE}}\ } $$

with sum-of-squares (SS) is given simply by

$$ \mbox{SS} = \sum_{i=1}^{n} (\hat{Z}_i-Z_i)^2\\ $$

So, using the $\norm{2}$ for the distance metric, is equal to saying that we want to minimize $J$ with respect to the MSE

$$ \ar{rl}{ J &= \mbox{MSE}(h(\bX), \by\st{true}) \\ &= \mbox{MSE}(\by\st{pred}~, \by\st{true}) \\ &= \mbox{MSE}(\hat{\by}, \by\st{true}) } $$

Note: when minimizing one can ignore the constant factor $1/n$ and it really does not matter if you minimize MSE or RMSE. Often $J$ is also multiplied by 1/2 to ease notation when trying to differentiate it.

$$ \ar{rl}{ J(\bX,\by\st{true};\btheta) &\propto \half ||\by\st{pred} - \by\st{true} ||_2^2 \\ &\propto \mbox{MSE} } $$

MSE¶

Now, let us take a look on how you calculate the MSE.

The MSE uses the $\norm{2}$ norm internally, well, actually $||\cdot||^2_2$ to be precise, and basically just sums, means and roots the individual (scalar) losses (distances), we just saw before.

And the RMSE is just an MSE with a final square-root call.

Qc Construct the Root Mean Square Error (RMSE) function (Equation 2-1 [HOML]).¶

Call the function RMSE, and evaluate it using the $\bX$ matrix and $\by$ from Qa.

We implement a dummy hypothesis function, that just takes the first column of $\bX$ as its 'prediction'

$$ h\st{dummy}(\bX) = \bX(:,0) $$

Do not re-implement the $\norm{2}$ for the RMSE function, but call the '''L2''' function you just implemented internally in RMSE.

# TODO: solve Qc...implement your RMSE function here

assert False, "TODO: solve Qc, and remove me.."

# Dummy h function:

def h(X):

if X.ndim!=2:

raise ValueError("excpeted X to be of ndim=2, got ndim=",X.ndim)

if X.shape[0]==0 or X.shape[1]==0:

raise ValueError("X got zero data along the 0/1 axis, cannot continue")

return X[:,0]

# Calls your RMSE() function:

r=RMSE(h(X),y)

# TEST vector:

eps=1E-9

expected=6.57647321898295

print(f"RMSE={r}, diff={r-expected}")

assert fabs(r-expected)<eps, "your RMSE dist seems to be wrong"

print("OK")

MAE¶

Qd Similar construct the Mean Absolute Error (MAE) function (Equation 2-2 [HOML]) and evaluate it.¶

The MAE will algorithmic wise be similar to the MSE part from using the $\norm{1}$ instead of the $\norm{2}$ norm.

Again, re-implementation of the$\norm{1}$ is a no-go, call the '''L1''' instead internally i MAE.

# TODO: solve Qd

assert False, "TODO: solve Qd, and remove me.."

# Calls your MAE function:

r=MAE(h(X), y)

# TEST vector:

expected=3.75

print(f"MAE={r}, diff={r-expected}")

assert fabs(r-expected)<eps, "MAE dist seems to be wrong"

print("OK")

Pythonic Code¶

Robustness of Code¶

Data validity checking is an essential part of robust code, and in Python the 'fail-fast' method is used extensively: instead of lingering on trying to get the 'best' out of an erroneous situation, the fail-fast pragma will be very loud about any data inconsistencies at the earliest possible moment.

Hence robust code should include a lot of error checking, say as pre- and post-conditions (part of the design-by-contract programming) when calling a function: when entering the function you check that all parameters are ok (pre-condition), and when leaving you check the return parameter (post-conditions).

Normally assert-checking or exception-throwing will do the trick just fine, with the exception method being more pythonic.

For the norm-function you could, for instance, test your input data to be 'vector' like, i.e. like

assert x.shape[0]>=0 and x.shape[1]==0

if not x.ndim==1:

raise some error

or similar.

Qe Robust Code¶

Add error checking code (asserts or exceptions), that checks for right $\hat\by$-$\by$ sizes of the MSE and MAE functions.

Also add error checking to all you previously tested L2() and L1() functions, and re-run all your tests.

# TODO: solve Qe...you need to modify your python cells above

assert False, "TODO: solve Qe, and remove me.."

Qf Conclusion¶

Now, conclude on all the exercise above.

Write a short textual conclusion (max. 10- to 20-lines) that extract the essence of the exercises: why did you think it was important to look at these particular ML concepts, and what was our overall learning outcome of the exercises (in broad terms).

# TODO: Qf concluding remarks in text..

REVISIONS||

---------||

2018-12-18| CEF, initial.

2019-01-31| CEF, spell checked and update.

2019-02-04| CEF, changed d1/d2 in Qb to L1/L2. Fixe rev date error.

2019-02-04| CEF, changed headline.

2019-02-04| CEF, changed (.) in dist(x,y) to use pipes instead.

2019-02-04| CEF, updated supervised learning fig, and changed , to ; for thetas, and change = to propto.

2019-02-05| CEF, post lesson update, minor changes, added fabs around two test vectors.

2019-02-07| CEF, updated def section.

2019-09-01| CEF, updated for ITMAL v2.

2019-09-04| CEF, updated for print-f and added conclusion Q.

2019-09-05| CEF, fixed defect in print string and commented on fabs.

2020-01-30| CEF, F20 ITMAL update.

2020-02-03| CEF, minor text fixes.

2020-02-24| CEF, elaborated on MAE and RMSE, emphasized not to use np functionality in L1 and L2.

2020-09-03| CEF, E20 ITMAL update, updated figs paths.

2020-09-06| CEF, added alt text.

2020-09-07| CEF, updated HOML page refs.

2021-01-12| CEF, F21 ITMAL update, moved revision table.

2021-02-09| CEF, elaborated on test-vectors. Changed order of Design Matrix descriptions.

2021-08-02| CEF, update to E21 ITMAL.

2022-01-25| CEF, update to F22 SWMAL.

2022-02-25| CEF, removed inner product equations.

2022-08-30| CEF, updated to v1 changes.

2023-02-07| CEF, minor update for d.

Implementing a dummy binary-classifier with fit-predict interface¶

We begin with the MNIST data-set and will reuse the data loader from Scikit-learn. Next we create a dummy classifier, and compare the results of the SGD and dummy classifiers using the MNIST data...

Qa Load and display the MNIST data¶

There is a sklearn.datasets.fetch_openml dataloader interface in Scikit-learn. You can load MNIST data like

from sklearn.datasets import fetch_openml

# Load data from https://www.openml.org/d/554

X, y = fetch_openml('mnist_784',??) # needs to return X, y, replace '??' with suitable parameters!

# Convert to [0;1] via scaling (not always needed)

#X = X / 255.

but you need to set parameters like return_X_y and cache if the default values are not suitable!

Check out the documentation for the fetch_openml MNIST loader, try it out by loading a (X,y) MNIST data set, and plot a single digit via the MNIST_PlotDigit function here (input data is a 28x28 NMIST subimage)

%matplotlib inline

def MNIST_PlotDigit(data):

import matplotlib

import matplotlib.pyplot as plt

image = data.reshape(28, 28)

plt.imshow(image, cmap = matplotlib.cm.binary, interpolation="nearest")

plt.axis("off")

Finally, put the MNIST loader into a single function called MNIST_GetDataSet() so you can reuse it later.

# TODO: add your code here..

assert False, "TODO: solve Qa, and remove me.."

Qb Add a Stochastic Gradient Decent [SGD] Classifier¶

Create a train-test data-set for MNIST and then add the SGDClassifier as done in [HOML], p.103.

Split your data and run the fit-predict for the classifier using the MNIST data.(We will be looking at cross-validation instead of the simple fit-predict in a later exercise.)

Notice that you have to reshape the MNIST X-data to be able to use the classifier. It may be a 3D array, consisting of 70000 (28 x 28) images, or just a 2D array consisting of 70000 elements of size 784.

A simple reshape() could fix this on-the-fly:

X, y = MNIST_GetDataSet()

print(f"X.shape={X.shape}") # print X.shape= (70000, 28, 28)

if X.ndim==3:

print("reshaping X..")

assert y.ndim==1

X = X.reshape((X.shape[0],X.shape[1]*X.shape[2]))

assert X.ndim==2

print(f"X.shape={X.shape}") # X.shape= (70000, 784)

Remember to use the category-5 y inputs

y_train_5 = (y_train == '5')

y_test_5 = (y_test == '5')

instead of the y's you are getting out of the dataloader. In effect, we have now created a binary-classifier, that enable us to classify a particular data sample, $\mathbf{x}(i)$ (that is a 28x28 image), as being a-class-5 or not-a-class-5.

Test your model on using the test data, and try to plot numbers that have been categorized correctly. Then also find and plots some misclassified numbers.

# TODO: add your code here..

assert False, "TODO: solve Qb, and remove me.."

Qc Implement a dummy binary classifier¶

Now we will try to create a Scikit-learn compatible estimator implemented via a python class. Follow the code found in [HOML], p.107 (for [HOML] 1st and 2nd editions: name you estimator DummyClassifier instead of Never5Classifyer).

Here our Python class knowledge comes into play. The estimator class hierarchy looks like

All Scikit-learn classifiers inherit from BaseEstimator (and possibly also ClassifierMixin), and they must have a fit-predict function pair (strangely not in the base class!) and you can actually find the sklearn.base.BaseEstimator and sklearn.base.ClassifierMixin python source code somewhere in you anaconda install dir, if you should have the nerves to go to such interesting details.

But surprisingly you may just want to implement a class that contains the fit-predict functions, without inheriting from the BaseEstimator, things still work due to the pythonic 'duck-typing': you just need to have the class implement the needed interfaces, obviously fit() and predict() but also the more obscure get_params() etc....then the class 'looks like' a BaseEstimator...and if it looks like an estimator, it is an estimator (aka. duck typing).

Templates in C++ also allow the language to use compile-time duck typing!

Call the fit-predict on a newly instantiated DummyClassifier object, and find a way to extract the accuracy score from the test data. You may implement an accuracy function yourself or just use the sklearn.metrics.accuracy_score function.

Finally, compare the accuracy score from your DummyClassifier with the scores found in [HOML] "Measuring Accuracy Using Cross-Validation", p.107. Are they comparable?

# TODO: add your code here..

assert False, "TODO: solve Qc, and remove me.."

Qd Conclusion¶

Now, conclude on all the exercise above.

Write a short textual conclusion (max. 10- to 20-lines) that extract the essence of the exercises: why did you think it was important to look at these particular ML concepts, and what was our overall learning outcome of the exercises (in broad terms).

# TODO: Qd concluding remarks in text..

REVISIONS||

---------||

2018-12-19| CEF, initial.

2018-02-06| CEF, updated and spell checked.

2018-02-08| CEF, minor text update.

2018-03-05| CEF, updated with SHN comments.

2019-09-02| CEF, updated for ITMAL v2.

2019-09-04| CEF, updated and added conclusion Q.

2020-01-25| CEF, F20 ITMAL update.

2020-02-04| CEF, updated page numbers to HOMLv2.

2020-09-03| CEF, E20 ITMAL update, udpated figs paths.

2020-09-06| CEF, added alt text.

2020-09-18| CEF, added binary-classifier text to Qb to emphasise 5/non-5 classification.

2021-01-12| CEF, F21 ITMAL update, moved revision tabel.

2021-08-02| CEF, update to E21 ITMAL.

2022-01-25| CEF, update to F22 SWMAL.

2023-02-07| CEF, update HOML page numbers.

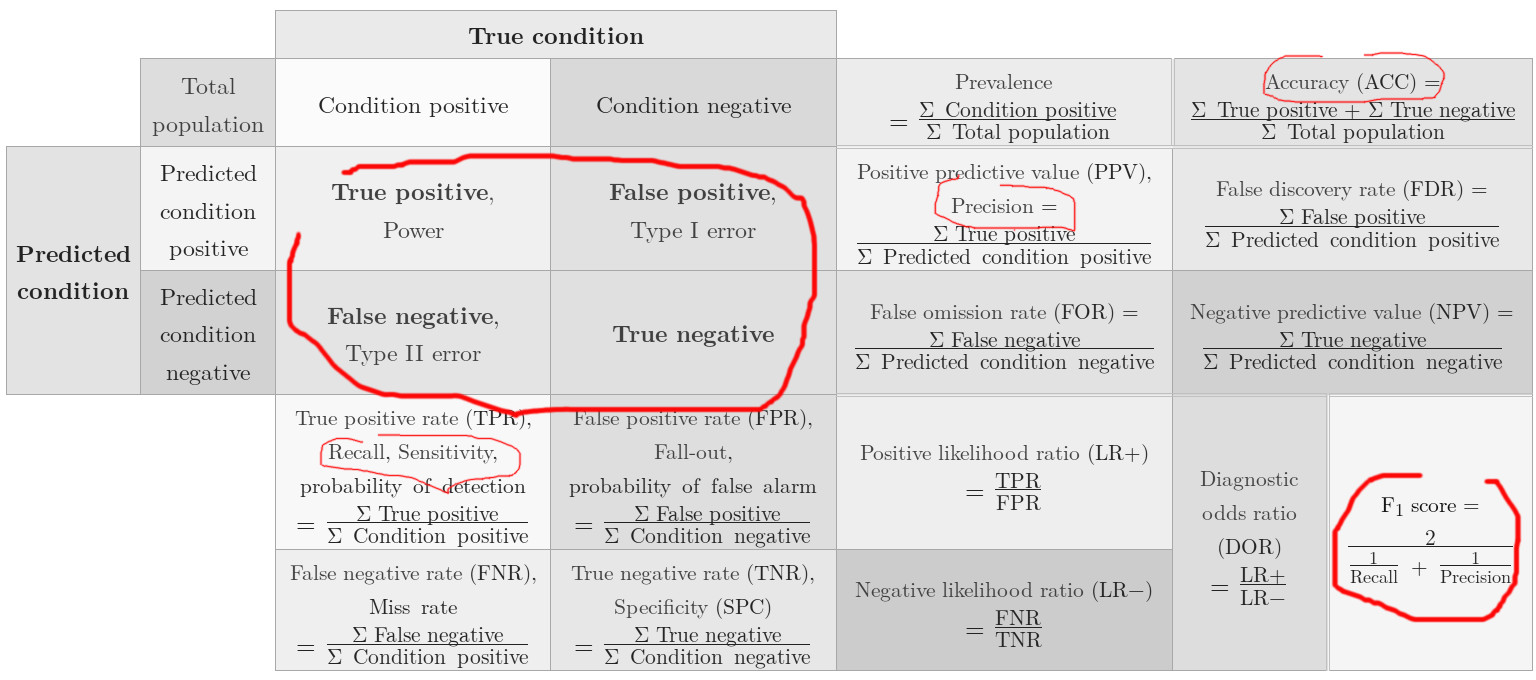

Performance Metrics¶

There are a number of frequently uses metrics in ML, namely accuracy, precision, recall and the $F_1$ score. All are called metrics (though they are not true norms, like ${\cal L}_2$ or ${\cal L}_1$ we saw last time).

Maybe performance score would be a better name than performance metric, at least for the accuracy, precision, recall we will be looking at---emphasising the conceptual distinction between the score-function and cost(/loss/error/objective)-function (the later is typically a true distance/norm function).

You can find a lot of details on say precision and recall in Wikipedia

Nomenclature¶

| NAME | SYMBOL | ALIAS |

|---|---|---|

| true positives | $TP$ | |

| true negatives | $TN$ | |

| false positives | $FP$ | type I error |

| false negatives | $FN$ | type II error |

and $N = N_P + N_N$ being the total number of samples and the number of positive and negative samples respectively.

Precision¶

$$ \def\by{\mathbf{y}} \def\ba{\begin{array}{lll}} \def\ea{\end{array}} \newcommand{\rem}[1]{} \newcommand\st[1]{_{\scriptsize #1}} \newcommand\myfrac[2]{\frac{#1\rule{0pt}{8pt}}{#2\rule{0pt}{8pt}}} \ba p &= \myfrac{TP}{TP + FP} \ea $$

Recall or Sensitivity¶

$$ \ba r &= \myfrac{TP}{TP + FN}\\ &= \myfrac{TP}{N_P} \ea $$

Accuracy¶

$$ \ba a &= \myfrac{TP + TN}{TP + TN + FP + FN}\\ &= \myfrac{TP + TN}{N}\\ &= \myfrac{TP + TN}{N_P~~ + N_N} \ea $$

Accuracy Paradox¶

A static constant model, say $p\st{cancer}=0$ may have higher accuracy than a real model with predictive power. This is odd!

Asymmetric weights could also be associated with the false positive and false negative predictions, yielding either FP of FN much more expensive than the other. Say, it is more expensive not to treat a person with cancer, than treating a person without cancer.

F-score¶

General $\beta$-harmonic mean of the precision and recall $$ F_\beta = (1+\beta^2) \myfrac{pr}{\beta^2 p+r}\\ $$ that for say $\beta=2$ or $\beta=0.5$ shifts or skews the emphasis on the two variables in the equation. Normally only the $\beta=1$ harmonic mean is used

$$ \ba F_1 &= \myfrac{2pr}{p+r}\\ &= \myfrac{2}{1/p + 1/r} \ea $$ with $F$ typically being synonymous with $F_1$.

If needed, find more info on Wikipedia

Confusion Matrix¶

For statistical classification, the confusion matrix or error matrix (or matching matrix in unsupervised learning) is for a two-class problem given by the $2\times2$ matrix with dimensions 'actual' and 'predicted'

$$ {\bf M}\st{confusion} = \begin{array}{l|ll} & \mbox{actual true} & \mbox{actual false} \\ \hline \mbox{predicted true} & TP & FP \\ \mbox{predicted false} & FN & TN \end{array} $$

The diagonal, in the square matrix, represent predicted values being the same as the actual values, off-diagonal elements represent erroneous prediction.

Also notice, that the layout of this matrix is different of what is given in [HOML], "Confusion Matrix", p.110/fig 3-3. This is just a minor issue, since we can always flip/rotate/transpose the matrix (say by flipping the $\by\st{true}$ and $\by\st{pred}$ arguments).

For N-class classification the matrix gives a matrix with $N$ actual classes and $N$ predicted classes

$$ {\bf M}\st{confusion}~~~ = \left[ \begin{array}{llll} c_{11} & c_{12} & \cdots & c_{1n} \\ c_{21} & c_{22} & \cdots & c_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ c_{n1} & c_{n2} & \cdots & c_{nn} \\ \end{array} \right] $$ with say element $c_{21}$ being the number of actual classes '1' being predicted (erroneously) as class '2'.

Nomenclature for the Confusion Matrix¶

The naming of the elements in the confusion matrix can be rather exotic, like false omission rate (see the figure below), but we won't get to such detail here...let us stick with TP, TN, FP, FN and $F_1$!

If you need more info on the confusion matrix:

Qa Implement the Accuracy function and test it on the MNIST data.¶

We now follow the convention in Scikit-learn, that a score funtion takes the arguments y_true and then y_pred

sklearn.metrics.accuracy_score(y_true, y_pred, ..)

Implement a general accuracy function MyAccuracy(y_true, y_pred). Again, implement the function you self from scratch, i.e. do not use any helper functions from Scikit-learn (implementing via sklearn.metrics.confusion_matrix is also not allowed, othewise you will then learn nothing!)

Reuse your MNIST data loader and test the MyAccuracy function both on your dummy classifier and on the Stochastic Gradient Descent classifier (with setup parameters as in [HOML]).

Compare your accuracy score with the acutal value from sklearn.metrics.accuracy_score().

(Implementation note: what do you do, if the denominator is zero?)

# TODO: Qa...

def MyAccuracy(y_true, y_pred):

# TODO: you impl here

assert False, "TODO: solve Qa, and remove me.."

# TEST FUNCTION: example of a comperator, using Scikit-learn accuracy_score

#def TestAccuracy(y_true, y_pred):

# a0=MyAccuracy(y_true, y_pred)

# a1=accuracy_score(y_true, y_pred)

#

# print(f"\nmy a ={a0}")

# print(f"scikit-learn a={a1}")

#

# # do some numerical comparison here, like

# # if fabs(a0-a1)<eps then ..

Qb Implement Precision, Recall and $F_1$-score and test it on the MNIST data for both the SGD and Dummy classifier models¶

Now, implement the MyPrecision, MyRecall and MyF1Score functions, again taking MNIST as input, using the SGD and the Dummy classifiers and make some test vectors to compare to the functions found in Scikit-learn...

(Implementation note: as before, what do you do, if the denominator is zero?)

# TODO: Qb..

def MyPrecision(y_true, y_pred):

# TODO: you impl here

assert False, "TODO: solve Qb, and remove me.."

def MyRecall(y_true, y_pred):

# TODO: you impl here

assert False, "TODO: solve Qb, and remove me.."

def MyF1Score(y_true, y_pred):

# TODO: you impl here

assert False, "TODO: solve Qb, and remove me.."

# TODO: your test code here!

Qc The Confusion Matrix¶

Revisit your solution to Qb in the dummy_classifier.ipynb. Generate the confusion matrix for both the Dummy and the SGD classifier using the scklearn.metrics.confusion_matrix function.

I got the two confusion matrices

M_dummy=[[18166 0]

[ 1834 0]]

M_SDG=[[17618 548]

[ 267 1567]]

your data may look similar (but not 100% equal).

How are the Scikit-learn confusion matrix organized, where are the TP, FP, FN and TN located in the matrix indices, and what happens if you mess up the parameters calling

confusion_matrix(y_test_5_pred, y_test5)

instead of

confusion_matrix(y_test_5, y_test_5_pred)

# TODO: Qc

assert False, "TODO: solve Qc, and remove me..

Qd A Confusion Matrix Heat-map¶

Generate a heat map image for the confusion matrices, M_dummy and M_SGD respectively, getting inspiration from [HOML] "Error Analysis", pp.122-125.

This heat map could be an important guide for you when analysing multiclass data in the future.

# TODO: Qd

assert False, "TODO: solve Qd, and remove me..

Qe Conclusion¶

Now, conclude on all the exercise above.

Write a short textual conclusion (max. 10- to 20-lines) that extract the essence of the exercises: why did you think it was important to look at these particular ML concepts, and what was our overall learning outcome of the exercises (in broad terms).

# TODO: Qe concluding remarks in text..

REVISIONS|| :- | :- | 2018-12-19| CEF, initial. 2018-02-07| CEF, updated. 2018-02-07| CEF, rewritten accuracy paradox section. 2018-03-05| CEF, updated with SHN comments. 2019-09-01| CEF, updated for ITMAL v2. 2019-09-04| CEF, updated for print-f and added conclusion Q. 2020-01-25| CEF, F20 ITMAL update. 2020-02-03| CEF, minor text fixes. 2020-02-04| CEF, updated page numbers to HOMLv2. 2020-02-17| CEF, added implementation note on denominator=0. 2020-09-03| CEF, E20 ITMAL update, udpated figs paths. 2020-09-06| CEF, added alt text. 2020-09-07| CEF, updated HOML page refs. 2020-09-21| CEF, fixed factor 2 error in beta-harmonic. 2021-01-12| CEF, F21 ITMAL update, moved revision tabel. 2021-08-02| CEF, update to E21 ITMAL. 2022-01-25| CEF, update to F22 STMAL. 2023-02-07| CEF, update HOML page numbers. 2023-02-09| CEF, chagned y_train to y_test in conf. matrix call. 2023-08-30| CEF, minor table change.

Supergruppe diskussion¶

§ 2 "End-to-End Machine Learning Project" [HOML]¶

Genlæs kapitel § 2 og forbered mundtlig præsentation.

Forberedelse inden lektionen¶

Een eller flere af gruppe medlemmer forbereder et mundtligt resume af § 2:

i skal kunne give et kort mundligt resume af hele § 2 til en anden gruppe (på nær, som nævnt, Create the Workspace og Download the Data),

resume holdes til koncept-plan, dvs. prøv at genfortælle, hvad de overordnede linier er i kapitlerne i [HOML].

Lav et kort skriftlig resume af de enkelte underafsnit, ca. 5 til 20 liners tekst, se "TODO"-template herunder (MUST, til O2 aflevering).

Kapitler (incl. underkapitler):

- Look at the Big Picture,

- Get the Data,

- Explore and Visualize the Data to Gain Insights,

- Prepare the Data for Machine Learning Algorithms,

- Select and Train a Model,

- Fine-Tune Your Model,

- Launch, Monitor, and Maintain Your System,

- Try It Out!.

På klassen¶

Supergruppe [SG] resume af § 2 End-to-End, ca. 30 til 45 min.

en supergruppe [SG], sammensættes af to grupper [G], on-the-fly på klassen,

hver gruppe [G] forbereder og giver en anden gruppe [G] et mundtligt resume af § 2 til en anden gruppe,

tid: ca. 30 mim. sammenlagt, den ene grupper genfortæller første halvdel af § 2 i ca. 15 min., hvorefter den anden gruppe genfortæller resten i ca. 15 min.

Resume: Look at the Big Picture¶

TODO resume..

Resume: Get the Data¶

TODO resume..

Resume: Explore and Visualize the Data to Gain Insights,¶

TODO resume..

Resume: Prepare the Data for Machine Learning Algorithms¶

TODO resume..

Resume: Select and Train a Model¶

TODO resume..

Resume: Fine-Tune Your Model¶

TODO resume..

Resume: Launch, Monitor, and Maintain Your System¶

TODO resume..

Resume: Try It Out!.¶

TODO resume..

REVISIONS|| ---------|| 2019-01-28| CEF, initial. 2020-02-05| CEF, F20 ITMAL update. 2021-08-17| CEF, E21 ITMAL update. 2021-09-17| CEF, corrected some spell errors. 2022-01-28| CEF, update to F22 SWMAL. 2022-09-09| CEF, corrected 'MUST for O1' to 'MUST for O2' in text. 2023-02-13| CEF, updated to HOML 3rd, removed exclude subsections in 'Get the Data' in this excercise, since the parts with python environments has been removed in HOML.

SWMAL Opgave¶

Dataanalyse¶

Qa) Beskrivelse af datasæt til O4 projekt¶

I kurset er slutprojektet et bærende element, som I forventes at arbejde på igennem hele kurset sideløbende med de forskellige undervisningsemner.

I skal selv vælge et O4 projekt–det anbefales at I vælger en problemstilling, hvor der allerede er data til rådighed og en god beskrivelse af data, dataopsamlingsmetode og problemstilling.

I denne opgave skal I:

a) Give en kort konceptmæssig projektbeskrivelse af Jeres ide til O4 projekt.

b) Beskrive jeres valgte datasæt med en kort forklaring af baggrund og hvor I har fået data fra.

c) Beskrive data–dvs. hvilke features, antal samples, target værdier, evt. fejl/usikkerheder, etc.

d) Forklare hvordan I ønsker at anvende datasættet – vil I fx. bruge det til at prædiktere noget bestemt, lave en regression eller klassifikation, el.lign.

I vil nok komme til at anvende data også på andre måder i løbet af undervisningen – men det behøver I ikke nævne. Og det er også ok, hvis I ender med at bruge data på en anden måde end planlagt her.

Omfang af beskrivelsen forventes at være 1-2 sider.

Qb) Dataanalyse af eget datasæt¶

Lav data analyse på jeres egne data og projekt.

Det indebærer de sædvanlige elementer såsom plotte histogrammer, middelværdi/median/spredning, analysere for outliers/korrupte data, forslag til skalering af data og lignende former for analyse af data.

For nogle typer data (fx billed-data), hvor features ikke har en specifik betydning, er det mest histogrammer og lignende, som giver mening – det er helt o.k.

NOTE vdr. billeddatasæts¶

For billeddata fer hver pixel en feature, og alm. analyse beskrevet ovenfor giver ikke indsigt. Prøv i stedet for billeder at beskrive billedformater (JPEG, PNG osv. / RGB, HSV, gråtone, multispektral, etc.), størrelser af billeder, hvordan de er repræsenteret på disk (dirs osv.)

Giv også eksempler på billeder og evt. labels i billedesæt.

Histogrammer kan udføres på enkelte billeder, men kun i forbindelse med labelede områder---og bedst på billesæt med ens baggrunde.

Benytter i lyddata eller video gælder de samme begrænsinger som får billeder her.

NOTE vdr. valg af datasæt til O4¶

I har frie hænder til at vælge O4 projekt og tilhørende datasæt og valg af datasæt og ide til O4 her er ikke endelig.

Dvs. at i løbende kan modificere projektbeskrivelse og, evt. om nødvendigt, vælge et andet datasæt senere, hvis jeres nuværende valg viser sig umuligt (men er en dyr proces).

Scope af O4 projekt bør også begrænses, så det passer til kurset og til den 'time-box'ede aflevering.

REVISIONS|| :-|:-| 2021-08-17| CEF, moved from Word to Notebook. 2021-11-08| CEF, elaborated on image based data. 2022-01-25| CEF, update to F22 SWMAL. 2023-02-19| CEF, updated to F23 SWMAL.

Pipelines¶

We now try building af ML pipeline. The data for this exercise is the same as in L01, meaning that the OECD data from the 'intro.ipynb' have been save into a Python 'pickle' file.

The pickle library is a nifty data preservation method in Python, and from L01 the tuple (X, y) have been stored to the pickle file `itmal_l01_data.pkl', try reloading it..

%matplotlib inline

import sys

import pickle

import numpy as np

import matplotlib.pyplot as plt

from sklearn.neural_network import MLPRegressor

from sklearn.linear_model import LinearRegression

from sklearn.metrics import r2_score

def LoadDataFromL01():

filename = "Data/itmal_l01_data.pkl"

with open(f"{filename}", "rb") as f:

(X, y) = pickle.load(f)

return X, y

X, y = LoadDataFromL01()

print(f"X.shape={X.shape}, y.shape={y.shape}")

assert X.shape[0] == y.shape[0]

assert X.ndim == 2

assert y.ndim == 1 # did a y.ravel() before saving to picke file

assert X.shape[0] == 29

# re-create plot data (not stored in the Pickel file)

m = np.linspace(0, 60000, 1000)

M = np.empty([m.shape[0], 1])

M[:, 0] = m

print("OK")

Revisiting the problem with the MLP¶

Using the MLP for the QECD data in Qd) from intro.ipynb produced a negative $R^2$, meaning that it was unable to fit the data, and the MPL model was actually worse than the naive $\hat y$ (mean value of y).

Let's just revisit this fact. When running the next cell you should now see an OK $~R^2_{lin.reg}~$ score and a negative $~R^2_{mlp}~$ score..

# Setup the MLP and lin. regression again..

def isNumpyData(t: np.ndarray, expected_ndim: int):

assert isinstance(expected_ndim, int), f"input parameter 'expected_ndim' is not an integer but a '{type(expected_ndim)}'"

assert expected_ndim>=0, f"expected input parameter 'expected_ndim' to be >=0, got {expected_ndim}"

if t is None:

print("input parameter 't' is None", file=sys.stderr)

return False

if not isinstance(t, np.ndarray):

print("excepted numpy.ndarray got type '{type(t)}'", file=sys.stderr)

return False

if not t.ndim==expected_ndim:

print("expected ndim={expected_ndim} but found {t.ndim}", file=sys.stderr)

return False

return True

def PlotModels(model1, model2, X: np.ndarray, y: np.ndarray, name_model1: str, name_model2: str):

# NOTE: local function is such a nifty feature of Python!

def CalcPredAndScore(model, X: np.ndarray, y: np.ndarray,):

assert isNumpyData(X, 2) and isNumpyData(y, 1) and X.shape[0]==y.shape[0]

y_pred_model = model.predict(X)

score_model = r2_score(y, y_pred_model) # call r2

return y_pred_model, score_model

assert isinstance(name_model1, str) and isinstance(name_model2, str)

y_pred_model1, score_model1 = CalcPredAndScore(model1, X, y)

y_pred_model2, score_model2 = CalcPredAndScore(model2, X, y)

plt.plot(X, y_pred_model1, "r.-")

plt.plot(X, y_pred_model2, "kx-")

plt.scatter(X, y)

plt.xlabel("GDP per capita")

plt.ylabel("Life satisfaction")

plt.legend([name_model1, name_model2, "X OECD data"])

l = max(len(name_model1), len(name_model2))

print(f"{(name_model1).rjust(l)}.score(X, y)={score_model1:0.2f}")

print(f"{(name_model2).rjust(l)}.score(X, y)={score_model2:0.2f}")

# lets make a linear and MLP regressor and redo the plots

mlp = MLPRegressor(hidden_layer_sizes=(10, ),

solver='adam',

activation='relu',

tol=1E-5,

max_iter=100000,

verbose=False)

linreg = LinearRegression()

mlp.fit(X, y)

linreg.fit(X, y)

print("The MLP may mis-fit the data, seen in the, sometimes, bad R^2 score..\n")

PlotModels(linreg, mlp, X, y, "lin.reg", "MLP")

print("\nOK")

Qa) Create a Min/max scaler for the MLP¶

Now, the neurons in neural networks normally expect input data in the range [0;1] or sometimes in the range [-1;1], meaning that for value outside this range then the neuron will saturate to its min or max value (also typical 0 or 1).

A concrete value of X is, say 22.000 USD, that is far away from what the MLP expects. Af fix to the problem in Qd), from intro.ipynb, is to preprocess data by scaling it down to something more sensible.

Try to manually scale X to a range of [0;1], re-train the MLP, re-plot and find the new score from the rescaled input. Any better?

(If you already made exercise "Qe) Neural Network with pre-scaling" in L01, then reuse Your work here!)

# TODO: add your code here..

assert False, "TODO: rescale X and refit the model(s).."

Qb) Scikit-learn Pipelines¶

Now, rescale again, but use the sklearn.preprocessing.MinMaxScaler.

When this works put both the MLP and the scaler into a composite construction via sklearn.pipeline.Pipeline. This composite is just a new Scikit-learn estimator, and can be used just like any other fit-predict models, try it, and document it for the journal.

(You could reuse the PlotModels() function by also retraining the linear regressor on the scaled data, or just write your own plot code.)

# TODO: add your code here..

assert False, "TODO: put everything into a pipeline.."

Qc) Outliers and the Min-max Scaler vs. the Standard Scaler¶

Explain the fundamental problem with a min-max scaler and outliers.

Will a sklearn.preprocessing.StandardScaler do better here, in the case of abnormal feature values/outliers?

# TODO: research the problem here..

assert False, "TODO: investigate outlier problems and try a StandardScaler.."

Qd) Modify the MLP Hyperparameters¶

Finally, try out some of the hyperparameters associated with the MLP.

Specifically, test how few neurons the MLP can do with---still producing a sensible output, i.e. high $R^2$.

Also try-out some other activation functions, ala sigmoid, and solvers, like sgd.

Notice, that the Scikit-learn MLP does not have as many adjustable parameters, as a Keras MLP, for example, the Scikit-learn MLP misses neurons initialization parameters (p.333-334 [HOML,2nd], p.358-359 [HOML,3rd]) and the ELU activation function (p.336 [HOML,2nd], p.363 [HOML,3rd).

[OPTIONAL 1]: use a Keras MLP regressor instead of the Scikit-learn MLP (You need to install the Keras if its not installed as default).

[OPTIONAL 2]: try out the early_stopping hyperparameter on the MLPRegressor.

[OPTIONAL 3]: try putting all score-calculations into K-fold cross-validation methods readily available in Scikit-learn using

sklearn.model_selection.cross_val_predictsklearn.model_selection.cross_val_score

or similar (this is, in theory, the correct method, but can be hard to use due to the extremely small number of data points, n=29).

# TODO: add your code here..

assert False, "TODO: test out various hyperparameters for the MLP.."

REVISIONS|| :-|:-| 2020-10-15| CEF, initial. 2020-10-21| CEF, added Standard Scaler Q. 2020-11-17| CEF, removed orhpant text in Qa (moded to Qc). 2021-02-10| CEF, updated for ITMAL F21. 2021-11-08| CEF, updated print info. 2021-02-10| CEF, updated for SWMAL F22. 2023-02-19| CEF, updated for SWMAL F23, adjuste page numbers for 3rd.ed. 2023-02-21| CEF, added types, rewrote CalcPredAndScore and added isNumpyData.

Training a Linear Regressor I¶

The goal of the linear regression is to find the argument $w$ that minimizes the sum-of-squares error over all inputs.

Given the usual ML input data matrix $\mathbf X$ of size $(n,d)$ where each row is an input column vector $(\mathbf{x}^{(i)})^\top$ data sample of size $d$

$$ \renewcommand\rem[1]{} \rem{ITMAL: CEF def and LaTeX commands, remember: no newlines in defs} \renewcommand\eq[2]{#1 &=& #2\\} \renewcommand\ar[2]{\begin{array}{#1}#2\end{array}} \renewcommand\ac[2]{\left[\ar{#1}{#2}\right]} \renewcommand\st[1]{_{\textrm{\scriptsize #1}}} \renewcommand\norm[1]{{\cal L}_{#1}} \renewcommand\obs[2]{#1_{\textrm{\scriptsize obs}}^{\left(#2\right)}} \renewcommand\diff[1]{\mathrm{d}#1} \renewcommand\pown[1]{^{(#1)}} \def\pownn{\pown{n}} \def\powni{\pown{i}} \def\powtest{\pown{\textrm{\scriptsize test}}} \def\powtrain{\pown{\textrm{\scriptsize train}}} \def\bX{\mathbf{M}} \def\bX{\mathbf{X}} \def\bZ{\mathbf{Z}} \def\bw{\mathbf{m}} \def\bx{\mathbf{x}} \def\by{\mathbf{y}} \def\bz{\mathbf{z}} \def\bw{\mathbf{w}} \def\btheta{{\boldsymbol\theta}} \def\bSigma{{\boldsymbol\Sigma}} \def\half{\frac{1}{2}} \renewcommand\pfrac[2]{\frac{\partial~#1}{\partial~#2}} \renewcommand\dfrac[2]{\frac{\mathrm{d}~#1}{\mathrm{d}#2}} \bX = \ac{cccc}{ x_1\pown{1} & x_2\pown{1} & \cdots & x_d\pown{1} \\ x_1\pown{2} & x_2\pown{2} & \cdots & x_d\pown{2}\\ \vdots & & & \vdots \\ x_1\pownn & x_2\pownn & \cdots & x_d\pownn\\ } $$

and $\by$ is the target output column vector of size $n$

$$ \by = \ac{c}{ y\pown{1} \\ y\pown{2} \\ \vdots \\ y\pown{n} \\ } $$

The linear regression model, via its hypothesis function and for a column vector input $\bx\powni$ of size $d$ and a column weight vector $\bw$ of size $d+1$ (with the additional element $w_0$ being the bias), can now be written as simple as

$$ \ar{rl}{ h(\bx\powni;\bw) &= \bw^\top \ac{c}{1\\\bx\powni} \\ &= w_0 + w_1 x_1\powni + w_2 x_2\powni + \cdots + w_d x_d\powni } $$

using the model parameters or weights, $\bw$, aka $\btheta$. To ease notation $\bx$ is assumed to have the 1 element prepended in the following so that $\bx$ is a $d+1$ column vector

$$ \ar{rl}{ \ac{c}{1\\\bx\powni} &\mapsto \bx\powni, ~~~~\textrm{by convention in the following...}\\ h(\bx\powni;\bw) &= \bw^\top \bx\powni } $$

This is actually the first fully white-box machine learning algorithm, that we see. All the glory details of the algorithm are clearly visible in the internal vector multiplication...quite simple, right? Now we just need to train the weights...

Loss or Objective Function - Formulation for Linear Regression¶

The individual cost (or loss), $L\powni$, for a single input-vector $\bx\powni$ is a measure of how the model is able to fit the data: the higher the $L\powni$ value the worse it is able to fit. A loss of $L=0$ means a perfect fit.

It can be given by, say, the square difference from the calculated output, $h$, to the desired output, $y$

$$ \ar{rl}{ L\powni &= || h(\bx\powni;\bw) - y\powni ||_2^2\\ &= || \bw^\top\bx\powni - y\powni ||_2^2\\ &= \left( \bw^\top\bx\powni - y\powni \right)^2 } $$ when $L$ is based on the $\norm{2}^2$ norm, and only when $y$ is in one dimension.

To minimize all the $L\powni$ losses (or indirectly also the MSE or RMSE) is to minimize the sum of all the individual costs, via the total cost function $J$

$$ \ar{rl}{ \textrm{MSE}(\bX,\by;\bw) &= \frac{1}{n} \sum_{i=1}^{n} L\powni \\ &= \frac{1}{n} \sum_{i=1}^{n} \left( \bw^\top\bx\powni - y\powni \right)^2\\ &= \frac{1}{n} ||\bX \bw - \by||_2^2 } $$

here using the squared Euclidean norm, $\norm{2}^2$, via the $||\cdot||_2^2$ expressions.

Now the factor $\frac{1}{n}$ is just a constant and can be ignored, yielding the total cost function

$$ \ar{rl}{ J &= \frac{1}{2} ||\bX \bw - \by||_2^2\\ &\propto \textrm{MSE} } $$

adding yet another constant, 1/2, to ease later differentiation of $J$.

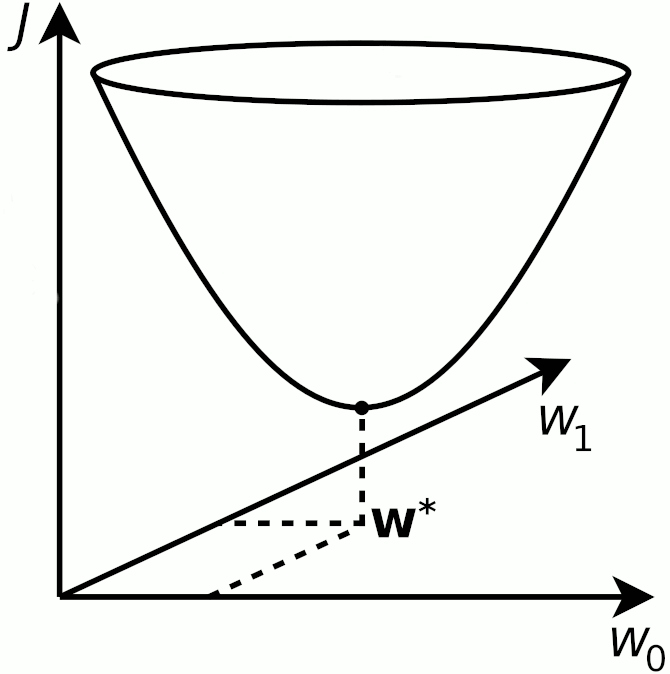

Training¶

Training the linear regression model now amounts to computing the optimal value of the $\bw$ weight; that is finding the $\bw$-value that minimizes the total cost

$$ \bw^* = \textrm{argmin}_\bw~J\\ $$

where $\textrm{argmin}_\bw$ means find the argument of $\bw$ that minimizes the $J$ function. This minimum (sometimes a maximum, via argmax) is denoted $\bw^*$ in most ML literature.

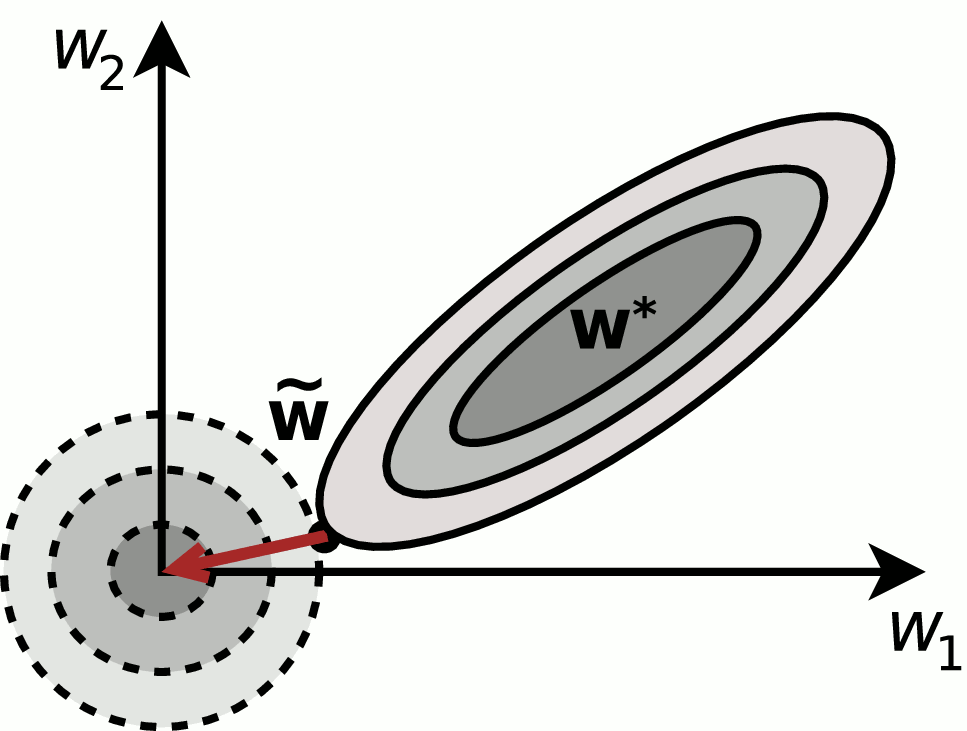

The minimization can in 2-D visually be drawn as finding the lowest $J$ that for linear regression always form a convex shape

Training: The Closed-form Solution¶

To solve for $\bw^*$ in closed form (i.e. directly, without any numerical approximation), we find the gradient of $J$ with respect to $\bw$. Taking the partial deriverty $\partial/\partial_\bw$ of the $J$ via the gradient (nabla) operator

$$ \rem{ \frac{\partial}{\partial \bw} = \ac{c}{ \frac{\partial}{\partial w_1} \\ \frac{\partial}{\partial w_2} \\ \vdots\\ \frac{\partial}{\partial w_d} } } \nabla_\bw~J = \left[ \frac{\partial J}{\partial w_1}, \frac{\partial J}{\partial w_2}, \ldots , \frac{\partial J}{\partial w_m} \right]^\top $$ and setting it to zero yields the optimal solution for $\bw$, and ignoring all constant factors of 1/2 and $1/n$

$$ \ar{rl}{ \nabla_\bw J(\bw) &= \bX^\top \left( \bX \bw - \by \right) ~=~ 0\\ 0 &= \bX^\top\bX \bw - \bX^\top\by } $$

giving the closed-form solution, with $\by = [y\pown{1}, y\pown{2}, \cdots, y\pown{n}]^\top$

$$ \bw^* ~=~ \left( \bX^\top \bX \right)^{-1} \bX^\top \by $$

You already know this method from math, finding the extrema for a function, say

$$f(w)=w^2-2w-2$$

so is given by finding the place where the gradient $\mathrm{d}~f(w)/\mathrm{d}w = 0$

$$ \dfrac{f(w)}{w} = 2w -2 = 0 $$

so we see that there is an extremum at $w=1$. Checking the second deriverty tells if we are seeing a minimum, maximum or a saddlepoint at that point. In matrix terms, this corresponds to finding the Hessian matrix and gets notational tricky due to the multiple feature dimensions involved.

Qa Write a Python function that uses the closed-form to find $\bw^*$¶

Use the test data, X and y in the code below to find w via the closed-form. Use the test vectors for w to test your implementation, and remember to add the bias term (concat an all-one vector to X before solving).

# TODO: Qa...

# TEST DATA:

import numpy as np

from libitmal import utils as itmalutils

def GenerateData():

X = np.array([[8.34044009e-01],[1.44064899e+00],[2.28749635e-04],[6.04665145e-01]])

y = np.array([5.97396028, 7.24897834, 4.86609388, 3.51245674])

return X, y

X, y = GenerateData()

assert False, "find the least-square solution for X and y, your implementation here, say from [HOML, p.114 2nd./p.134 3rd.]"

# w = ...

# TEST VECTOR:

w_expected = np.array([4.046879011698, 1.880121487278])

itmalutils.PrintMatrix(w, label="w=", precision=12)

itmalutils.AssertInRange(w, w_expected, eps=1E-9)

print("OK")

Qb Find the limits of the least-square method¶

Again find the least-square optimal value for w now using then new X and y as inputs.

Describe the problem with the matrix inverse, and for what M and N combinations do you see, that calculation of the matrix inverse takes up long time?

# TODO: Qb...

def GenerateData(M, N):

# TEST DATA: Matrix, taken from [HOML]

print(f'GenerateData(M={N}, N={N})...')

assert M>0

assert N>0

assert isinstance(M, int)

assert isinstance(N, int)

# NOTE: not always possible to invert a random matrix;

# it becomes sigular, hence a more elaborate choice

# of values below (but still a hack):

X=2 * np.ones([M, N])

for i in range(X.shape[0]):

X[i,0]=i*4

for j in range(X.shape[1]):

X[0,j]=-j*4

y=4 + 3*X + np.random.randn(M,1)

y=y[:,0] # well, could do better here!

return X, y

X, y = GenerateData(M=10000, N=20)

assert False, "find the least-square solution for X and y, again"

# w =

print("OK")

REVISIONS|| :- | :- | 2018-12-18| CEF, initial. 2018-02-14| CEF, major update. 2018-02-18| CEF, fixed error in nabla expression. 2018-02-18| CEF, added minimization plot. 2018-02-18| CEF, added note on argmin/max. 2018-02-18| CEF, changed concave to convex. 2021-09-26| CEF, update for ITMAL E21. 2011-10-02| CEF, corrected page numbers for HOML v2 (109=>114). 2022-03-09| CEF, elaboreted on code and introduced GetData(). 2023-02-22| CEF, updated page no to HOML 3rd. ed., updated to SWMAL F23. 2023-09-19| CEF, changed LaTeX mbox and newcommand (VSCode error) to textrm/mathrm and renewcommand. 2023-09-28| CEF, elaborated on L-expressions from (..)^2 to || .. ||_2^2.

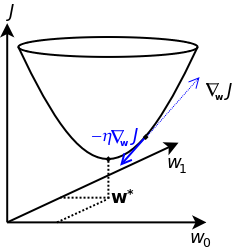

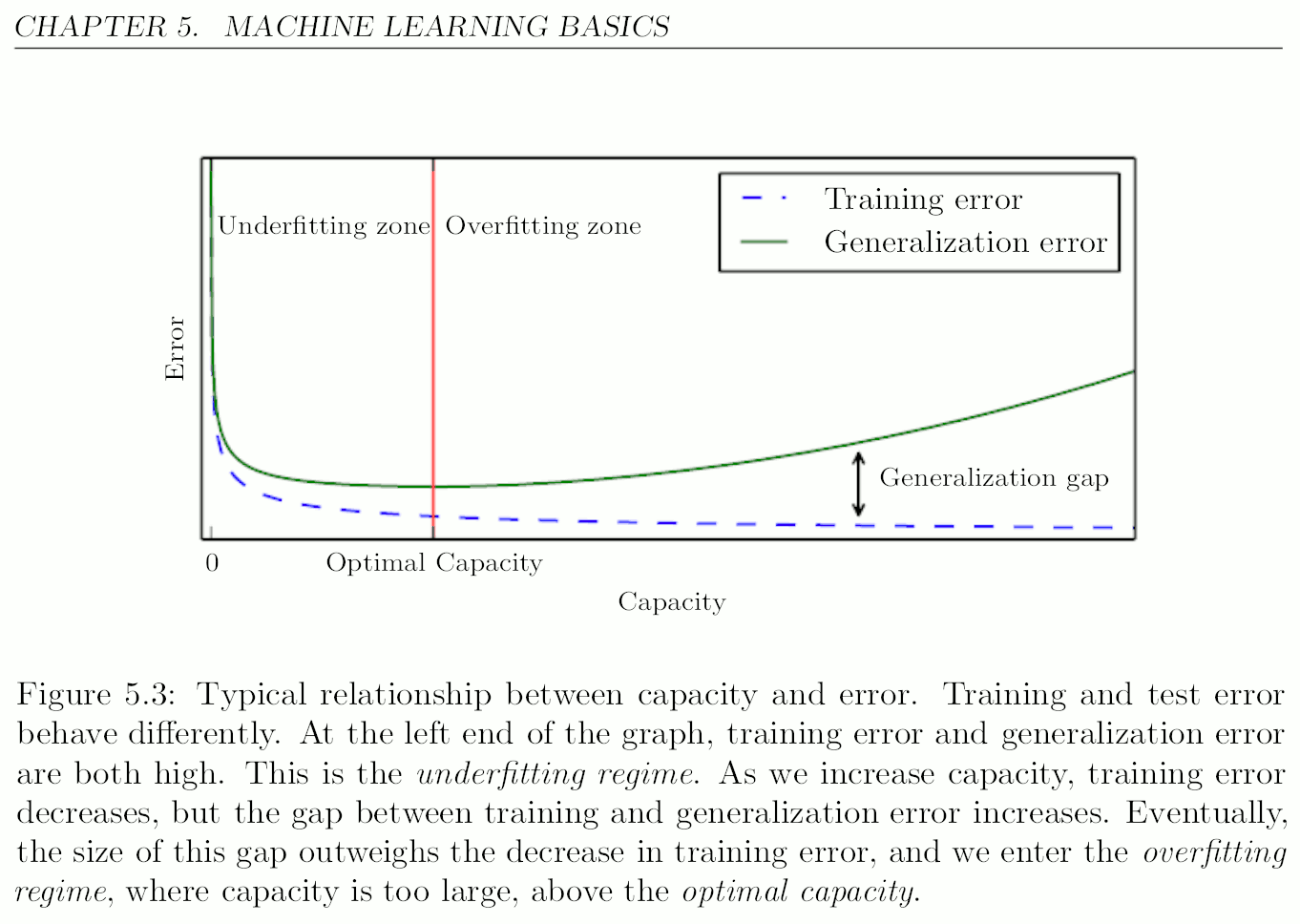

Gradient Descent Methods and Training¶

Finding the optimal solution in one-step, via

$$ \renewcommand\rem[1]{} \rem{ITMAL: CEF def and LaTeX commands, remember: no newlines in defs} \renewcommand\eq[2]{#1 &=& #2\\} \renewcommand\ar[2]{\begin{array}{#1}#2\end{array}} \renewcommand\ac[2]{\left[\ar{#1}{#2}\right]} \renewcommand\st[1]{_{\textrm{\scriptsize #1}}} \renewcommand\norm[1]{{\cal L}_{#1}} \renewcommand\obs[2]{#1_{\textrm{\scriptsize obs}}^{\left(#2\right)}} \renewcommand\diff[1]{\mathrm{d}#1} \renewcommand\pown[1]{^{(#1)}} \def\pownn{\pown{n}} \def\powni{\pown{i}} \def\powtest{\pown{\textrm{\scriptsize test}}} \def\powtrain{\pown{\textrm{\scriptsize train}}} \def\bX{\mathbf{M}} \def\bX{\mathbf{X}} \def\bZ{\mathbf{Z}} \def\bw{\mathbf{m}} \def\bx{\mathbf{x}} \def\by{\mathbf{y}} \def\bz{\mathbf{z}} \def\bw{\mathbf{w}} \def\btheta{{\boldsymbol\theta}} \def\bSigma{{\boldsymbol\Sigma}} \def\half{\frac{1}{2}} \renewcommand\pfrac[2]{\frac{\partial~#1}{\partial~#2}} \renewcommand\dfrac[2]{\frac{\mathrm{d}~#1}{\mathrm{d}#2}} \bw^* ~=~ \left( \bX^\top \bX \right)^{-1} \bX^\top \by $$

has its downsides: the scaling problem of the matrix inverse. Now, let us look at a numerical solution to the problem of finding the value of $\bw$ (aka $\btheta$) that minimizes the objective function $J$.

Again, ideally we just want to find places, where the (multi-dimensionally) gradient of $J$ is zero (here using a constant factor $\frac{2}{m}$)

$$ \ar{rl}{ \nabla_\bw J(\bw) &= \frac{2}{m} \bX^\top \left( \bX \bw - \by \right)\\ } $$

and numerically we calculate $\nabla_{\bw} J$ for a point in $\bw$-space, and then move along in the opposite direction of this gradient, taking a step of size $\eta$

$$ \bw^{(step~N+1)} = \bw^{(step~N)} - \eta \nabla_{\bw} J(\bw) $$

That's it, pretty simple, right (apart from numerical stability, problem with convergence and regularization, that we will discuss later).

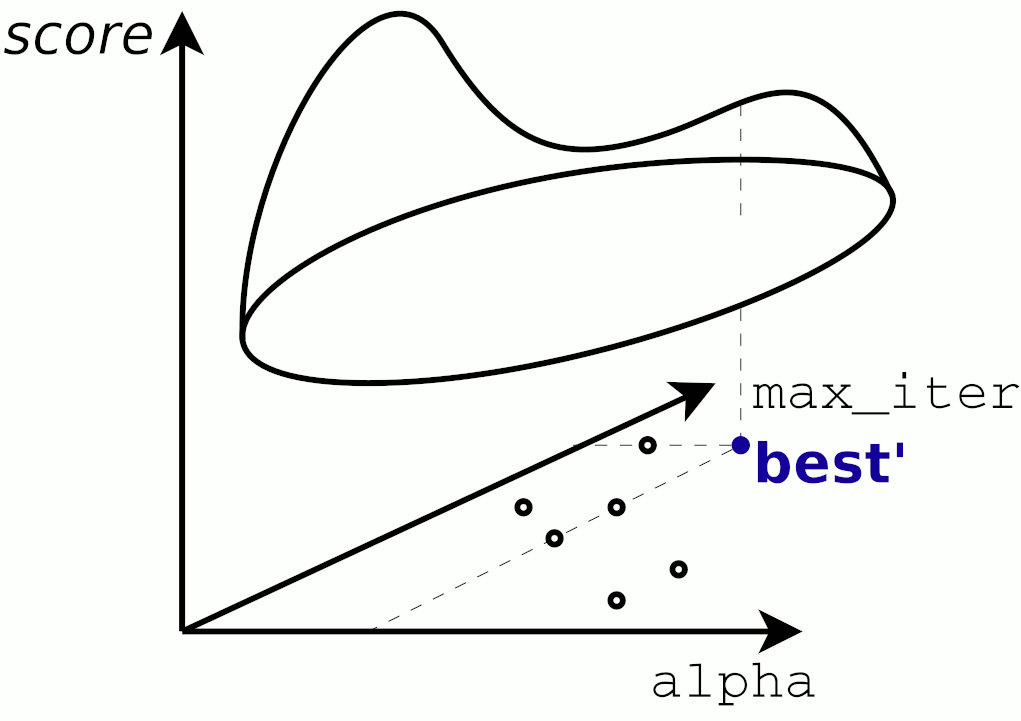

So, we begin with some initial $\bw$, and iterate via the equation above, towards places, where $J$ is smaller, and this can be illustrated as

If we hit the/a global minimum or just a local minimum (or in extremely rare cases a local saddle point) is another question when not using a simple linear regression model: for non-linear models we will in general not see a nice convex $J$-$\bw$ surface, as in the figure above.

Qa The Gradient Descent Method (GD)¶

Explain the gradient descent algorithm using the equations in the section '§ Bach Gradient Descent' [HOML, p.121-122 2nd, p.143 3rd], and relate it to the code snippet

X_b, y = GenerateData()

eta = 0.1

n_iterations = 1000

m = 100

theta = np.random.randn(2,1)

for iteration in range(n_iterations):

gradients = 2/m * X_b.T.dot(X_b.dot(theta) - y)

theta = theta - eta * gradients

in the python code below.

As usual, avoid going top much into details of the code that does the plotting.

What role does eta play, and what happens if you increase/decrease it (explain the three plots)?

# TODO: Qa...examine the method (without the plotting)

# NOTE: modified code from [GITHOML], 04_training_linear_models.ipynb

%matplotlib inline

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

from sklearn.linear_model import LinearRegression

def GenerateData():

X = 2 * np.random.rand(100, 1)

y = 4 + 3 * X + np.random.randn(100, 1)

X_b = np.c_[np.ones((100, 1)), X] # add x0 = 1 to each instance

return X, X_b, y

X, X_b, y = GenerateData()

eta = 0.1

n_iterations = 1000

m = 100

theta = np.random.randn(2,1)

for iteration in range(n_iterations):

gradients = 2/m * X_b.T.dot(X_b.dot(theta) - y)

theta = theta - eta * gradients

print(f'stochastic gradient descent theta={theta.ravel()}')

#############################

# rest of the code is just for plotting, needs no review

def plot_gradient_descent(theta, eta, theta_path=None):

m = len(X_b)

plt.plot(X, y, "b.")

n_iterations = 1000

for iteration in range(n_iterations):

if iteration < 10:

y_predict = X_new_b.dot(theta)

style = "b-" if iteration > 0 else "r--"

plt.plot(X_new, y_predict, style)

gradients = 2/m * X_b.T.dot(X_b.dot(theta) - y)

theta = theta - eta * gradients

if theta_path is not None:

theta_path.append(theta)

plt.xlabel("$x_1$", fontsize=18)

plt.axis([0, 2, 0, 15])

plt.title(r"$\eta = {}$".format(eta), fontsize=16)

np.random.seed(42)

theta_path_bgd = []

theta = np.random.randn(2,1) # random initialization

X_new = np.array([[0], [2]])

X_new_b = np.c_[np.ones((2, 1)), X_new] # add x0 = 1 to each instance

plt.figure(figsize=(10,4))

plt.subplot(131); plot_gradient_descent(theta, eta=0.02)

plt.ylabel("$y$", rotation=0, fontsize=18)

plt.subplot(132); plot_gradient_descent(theta, eta=0.1, theta_path=theta_path_bgd)

plt.subplot(133); plot_gradient_descent(theta, eta=0.5)

plt.show()

print('OK')